{

"cells": [

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"# Solving problems by Searching\n",

"\n",

"This notebook serves as supporting material for topics covered in **Chapter 3 - Solving Problems by Searching** and **Chapter 4 - Beyond Classical Search** from the book *Artificial Intelligence: A Modern Approach.* This notebook uses implementations from [search.py](https://github.com/aimacode/aima-python/blob/master/search.py) module. Let's start by importing everything from search module."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true,

"scrolled": true

},

"outputs": [],

"source": [

"from search import *\n",

"from notebook import psource, show_map, final_path_colors, display_visual\n",

"\n",

"# Needed to hide warnings in the matplotlib sections\n",

"import warnings\n",

"warnings.filterwarnings(\"ignore\")"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## CONTENTS\n",

"\n",

"* Overview\n",

"* Problem\n",

"* Node\n",

"* Simple Problem Solving Agent\n",

"* Search Algorithms Visualization\n",

"* Breadth-First Tree Search\n",

"* Breadth-First Search\n",

"* Best First Search\n",

"* Uniform Cost Search\n",

"* Greedy Best First Search\n",

"* A\\* Search\n",

"* Genetic Algorithm"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## OVERVIEW\n",

"\n",

"Here, we learn about problem solving. Building goal-based agents that can plan ahead to solve problems, in particular, navigation problem/route finding problem. First, we will start the problem solving by precisely defining **problems** and their **solutions**. We will look at several general-purpose search algorithms. Broadly, search algorithms are classified into two types:\n",

"\n",

"* **Uninformed search algorithms**: Search algorithms which explore the search space without having any information about the problem other than its definition.\n",

"* Examples:\n",

" 1. Breadth First Search\n",

" 2. Depth First Search\n",

" 3. Depth Limited Search\n",

" 4. Iterative Deepening Search\n",

"\n",

"\n",

"* **Informed search algorithms**: These type of algorithms leverage any information (heuristics, path cost) on the problem to search through the search space to find the solution efficiently.\n",

"* Examples:\n",

" 1. Best First Search\n",

" 2. Uniform Cost Search\n",

" 3. A\\* Search\n",

" 4. Recursive Best First Search\n",

"\n",

"*Don't miss the visualisations of these algorithms solving the route-finding problem defined on Romania map at the end of this notebook.*"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"For visualisations, we use networkx and matplotlib to show the map in the notebook and we use ipywidgets to interact with the map to see how the searching algorithm works. These are imported as required in `notebook.py`."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"%matplotlib inline\n",

"import networkx as nx\n",

"import matplotlib.pyplot as plt\n",

"from matplotlib import lines\n",

"\n",

"from ipywidgets import interact\n",

"import ipywidgets as widgets\n",

"from IPython.display import display\n",

"import time"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## PROBLEM\n",

"\n",

"Let's see how we define a Problem. Run the next cell to see how abstract class `Problem` is defined in the search module."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"psource(Problem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The `Problem` class has six methods.\n",

"\n",

"* `__init__(self, initial, goal)` : This is what is called a `constructor` and is the first method called when you create an instance of the class. `initial` specifies the initial state of our search problem. It represents the start state from where our agent begins its task of exploration to find the goal state(s) which is given in the `goal` parameter.\n",

"\n",

"\n",

"* `actions(self, state)` : This method returns all the possible actions agent can execute in the given state `state`.\n",

"\n",

"\n",

"* `result(self, state, action)` : This returns the resulting state if action `action` is taken in the state `state`. This `Problem` class only deals with deterministic outcomes. So we know for sure what every action in a state would result to.\n",

"\n",

"\n",

"* `goal_test(self, state)` : Given a graph state, it checks if it is a terminal state. If the state is indeed a goal state, value of `True` is returned. Else, of course, `False` is returned.\n",

"\n",

"\n",

"* `path_cost(self, c, state1, action, state2)` : Return the cost of the path that arrives at `state2` as a result of taking `action` from `state1`, assuming total cost of `c` to get up to `state1`.\n",

"\n",

"\n",

"* `value(self, state)` : This acts as a bit of extra information in problems where we try to optimise a value when we cannot do a goal test."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## NODE\n",

"\n",

"Let's see how we define a Node. Run the next cell to see how abstract class `Node` is defined in the search module."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"psource(Node)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The `Node` class has nine methods.\n",

"\n",

"* `__init__(self, state, parent, action, path_cost)` : This method creates a node. `parent` represents the node that this is a successor of and `action` is the action required to get from the parent node to this node. `path_cost` is the cost to reach current node from parent node.\n",

"\n",

"* `__repr__(self)` : This returns the state of this node.\n",

"\n",

"* `__lt__(self, node)` : Given a `node`, this method returns `True` if the state of current node is less than the state of the `node`. Otherwise it returns `False`.\n",

"\n",

"* `expand(self, problem)` : This method lists all the neighbouring(reachable in one step) nodes of current node. \n",

"\n",

"* `child_node(self, problem, action)` : Given an `action`, this method returns the immediate neighbour that can be reached with that `action`.\n",

"\n",

"* `solution(self)` : This returns the sequence of actions required to reach this node from the root node. \n",

"\n",

"* `path(self)` : This returns a list of all the nodes that lies in the path from the root to this node.\n",

"\n",

"* `__eq__(self, other)` : This method returns `True` if the state of current node is equal to the other node. Else it returns `False`.\n",

"\n",

"* `__hash__(self)` : This returns the hash of the state of current node."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We will use the abstract class `Problem` to define our real **problem** named `GraphProblem`. You can see how we define `GraphProblem` by running the next cell."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"psource(GraphProblem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now it's time to define our problem. We will define it by passing `initial`, `goal`, `graph` to `GraphProblem`. So, our problem is to find the goal state starting from the given initial state on the provided graph. Have a look at our romania_map, which is an Undirected Graph containing a dict of nodes as keys and neighbours as values."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"romania_map = UndirectedGraph(dict(\n",

" Arad=dict(Zerind=75, Sibiu=140, Timisoara=118),\n",

" Bucharest=dict(Urziceni=85, Pitesti=101, Giurgiu=90, Fagaras=211),\n",

" Craiova=dict(Drobeta=120, Rimnicu=146, Pitesti=138),\n",

" Drobeta=dict(Mehadia=75),\n",

" Eforie=dict(Hirsova=86),\n",

" Fagaras=dict(Sibiu=99),\n",

" Hirsova=dict(Urziceni=98),\n",

" Iasi=dict(Vaslui=92, Neamt=87),\n",

" Lugoj=dict(Timisoara=111, Mehadia=70),\n",

" Oradea=dict(Zerind=71, Sibiu=151),\n",

" Pitesti=dict(Rimnicu=97),\n",

" Rimnicu=dict(Sibiu=80),\n",

" Urziceni=dict(Vaslui=142)))\n",

"\n",

"romania_map.locations = dict(\n",

" Arad=(91, 492), Bucharest=(400, 327), Craiova=(253, 288),\n",

" Drobeta=(165, 299), Eforie=(562, 293), Fagaras=(305, 449),\n",

" Giurgiu=(375, 270), Hirsova=(534, 350), Iasi=(473, 506),\n",

" Lugoj=(165, 379), Mehadia=(168, 339), Neamt=(406, 537),\n",

" Oradea=(131, 571), Pitesti=(320, 368), Rimnicu=(233, 410),\n",

" Sibiu=(207, 457), Timisoara=(94, 410), Urziceni=(456, 350),\n",

" Vaslui=(509, 444), Zerind=(108, 531))"

]

},

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"It is pretty straightforward to understand this `romania_map`. The first node **Arad** has three neighbours named **Zerind**, **Sibiu**, **Timisoara**. Each of these nodes are 75, 140, 118 units apart from **Arad** respectively. And the same goes with other nodes.\n",

"\n",

"And `romania_map.locations` contains the positions of each of the nodes. We will use the straight line distance (which is different from the one provided in `romania_map`) between two cities in algorithms like A\\*-search and Recursive Best First Search.\n",

"\n",

"**Define a problem:**\n",

"Hmm... say we want to start exploring from **Arad** and try to find **Bucharest** in our romania_map. So, this is how we do it."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"romania_problem = GraphProblem('Arad', 'Bucharest', romania_map)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Romania Map Visualisation\n",

"\n",

"Let's have a visualisation of Romania map [Figure 3.2] from the book and see how different searching algorithms perform / how frontier expands in each search algorithm for a simple problem named `romania_problem`."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Have a look at `romania_locations`. It is a dictionary defined in search module. We will use these location values to draw the romania graph using **networkx**."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"romania_locations = romania_map.locations\n",

"print(romania_locations)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's get started by initializing an empty graph. We will add nodes, place the nodes in their location as shown in the book, add edges to the graph."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# node colors, node positions and node label positions\n",

"node_colors = {node: 'white' for node in romania_map.locations.keys()}\n",

"node_positions = romania_map.locations\n",

"node_label_pos = { k:[v[0],v[1]-10] for k,v in romania_map.locations.items() }\n",

"edge_weights = {(k, k2) : v2 for k, v in romania_map.graph_dict.items() for k2, v2 in v.items()}\n",

"\n",

"romania_graph_data = { 'graph_dict' : romania_map.graph_dict,\n",

" 'node_colors': node_colors,\n",

" 'node_positions': node_positions,\n",

" 'node_label_positions': node_label_pos,\n",

" 'edge_weights': edge_weights\n",

" }"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We have completed building our graph based on romania_map and its locations. It's time to display it here in the notebook. This function `show_map(node_colors)` helps us do that. We will be calling this function later on to display the map at each and every interval step while searching, using variety of algorithms from the book."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can simply call the function with node_colors dictionary object to display it."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"show_map(romania_graph_data)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Voila! You see, the romania map as shown in the Figure[3.2] in the book. Now, see how different searching algorithms perform with our problem statements."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## SIMPLE PROBLEM SOLVING AGENT PROGRAM\n",

"\n",

"Let us now define a Simple Problem Solving Agent Program. Run the next cell to see how the abstract class `SimpleProblemSolvingAgentProgram` is defined in the search module."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"psource(SimpleProblemSolvingAgentProgram)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The SimpleProblemSolvingAgentProgram class has six methods: \n",

"\n",

"* `__init__(self, intial_state=None)`: This is the `contructor` of the class and is the first method to be called when the class is instantiated. It takes in a keyword argument, `initial_state` which is initially `None`. The argument `intial_state` represents the state from which the agent starts.\n",

"\n",

"* `__call__(self, percept)`: This method updates the `state` of the agent based on its `percept` using the `update_state` method. It then formulates a `goal` with the help of `formulate_goal` method and a `problem` using the `formulate_problem` method and returns a sequence of actions to solve it (using the `search` method).\n",

"\n",

"* `update_state(self, percept)`: This method updates the `state` of the agent based on its `percept`.\n",

"\n",

"* `formulate_goal(self, state)`: Given a `state` of the agent, this method formulates the `goal` for it.\n",

"\n",

"* `formulate_problem(self, state, goal)`: It is used in problem formulation given a `state` and a `goal` for the `agent`.\n",

"\n",

"* `search(self, problem)`: This method is used to search a sequence of `actions` to solve a `problem`."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let us now define a Simple Problem Solving Agent Program. We will create a simple `vacuumAgent` class which will inherit from the abstract class `SimpleProblemSolvingAgentProgram` and overrides its methods. We will create a simple intelligent vacuum agent which can be in any one of the following states. It will move to any other state depending upon the current state as shown in the picture by arrows:\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"class vacuumAgent(SimpleProblemSolvingAgentProgram):\n",

" def update_state(self, state, percept):\n",

" return percept\n",

"\n",

" def formulate_goal(self, state):\n",

" goal = [state7, state8]\n",

" return goal \n",

"\n",

" def formulate_problem(self, state, goal):\n",

" problem = state\n",

" return problem \n",

" \n",

" def search(self, problem):\n",

" if problem == state1:\n",

" seq = [\"Suck\", \"Right\", \"Suck\"]\n",

" elif problem == state2:\n",

" seq = [\"Suck\", \"Left\", \"Suck\"]\n",

" elif problem == state3:\n",

" seq = [\"Right\", \"Suck\"]\n",

" elif problem == state4:\n",

" seq = [\"Suck\"]\n",

" elif problem == state5:\n",

" seq = [\"Suck\"]\n",

" elif problem == state6:\n",

" seq = [\"Left\", \"Suck\"]\n",

" return seq"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now, we will define all the 8 states and create an object of the above class. Then, we will pass it different states and check the output:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"state1 = [(0, 0), [(0, 0), \"Dirty\"], [(1, 0), [\"Dirty\"]]]\n",

"state2 = [(1, 0), [(0, 0), \"Dirty\"], [(1, 0), [\"Dirty\"]]]\n",

"state3 = [(0, 0), [(0, 0), \"Clean\"], [(1, 0), [\"Dirty\"]]]\n",

"state4 = [(1, 0), [(0, 0), \"Clean\"], [(1, 0), [\"Dirty\"]]]\n",

"state5 = [(0, 0), [(0, 0), \"Dirty\"], [(1, 0), [\"Clean\"]]]\n",

"state6 = [(1, 0), [(0, 0), \"Dirty\"], [(1, 0), [\"Clean\"]]]\n",

"state7 = [(0, 0), [(0, 0), \"Clean\"], [(1, 0), [\"Clean\"]]]\n",

"state8 = [(1, 0), [(0, 0), \"Clean\"], [(1, 0), [\"Clean\"]]]\n",

"\n",

"a = vacuumAgent(state1)\n",

"\n",

"print(a(state6)) \n",

"print(a(state1))\n",

"print(a(state3))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## SEARCHING ALGORITHMS VISUALIZATION\n",

"\n",

"In this section, we have visualizations of the following searching algorithms:\n",

"\n",

"1. Breadth First Tree Search\n",

"2. Depth First Tree Search\n",

"3. Breadth First Search\n",

"4. Depth First Graph Search\n",

"5. Best First Graph Search\n",

"6. Uniform Cost Search\n",

"7. Depth Limited Search\n",

"8. Iterative Deepening Search\n",

"9. A\\*-Search\n",

"10. Recursive Best First Search\n",

"\n",

"We add the colors to the nodes to have a nice visualisation when displaying. So, these are the different colors we are using in these visuals:\n",

"* Un-explored nodes - white\n",

"* Frontier nodes - orange\n",

"* Currently exploring node - red\n",

"* Already explored nodes - gray"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 1. BREADTH-FIRST TREE SEARCH\n",

"\n",

"We have a working implementation in search module. But as we want to interact with the graph while it is searching, we need to modify the implementation. Here's the modified breadth first tree search."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def tree_search_for_vis(problem, frontier):\n",

" \"\"\"Search through the successors of a problem to find a goal.\n",

" The argument frontier should be an empty queue.\n",

" Don't worry about repeated paths to a state. [Figure 3.7]\"\"\"\n",

" \n",

" # we use these two variables at the time of visualisations\n",

" iterations = 0\n",

" all_node_colors = []\n",

" node_colors = {k : 'white' for k in problem.graph.nodes()}\n",

" \n",

" #Adding first node to the queue\n",

" frontier.append(Node(problem.initial))\n",

" \n",

" node_colors[Node(problem.initial).state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" while frontier:\n",

" #Popping first node of queue\n",

" node = frontier.pop()\n",

" \n",

" # modify the currently searching node to red\n",

" node_colors[node.state] = \"red\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" if problem.goal_test(node.state):\n",

" # modify goal node to green after reaching the goal\n",

" node_colors[node.state] = \"green\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return(iterations, all_node_colors, node)\n",

" \n",

" frontier.extend(node.expand(problem))\n",

" \n",

" for n in node.expand(problem):\n",

" node_colors[n.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

"\n",

" # modify the color of explored nodes to gray\n",

" node_colors[node.state] = \"gray\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" return None\n",

"\n",

"def breadth_first_tree_search(problem):\n",

" \"Search the shallowest nodes in the search tree first.\"\n",

" iterations, all_node_colors, node = tree_search_for_vis(problem, FIFOQueue())\n",

" return(iterations, all_node_colors, node)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Now, we use `ipywidgets` to display a slider, a button and our romania map. By sliding the slider we can have a look at all the intermediate steps of a particular search algorithm. By pressing the button **Visualize**, you can see all the steps without interacting with the slider. These two helper functions are the callback functions which are called when we interact with the slider and the button."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Fagaras', romania_map)\n",

"a, b, c = breadth_first_tree_search(romania_problem)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=breadth_first_tree_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 2. Depth-First Tree Search:\n",

"Now let's discuss another searching algorithm, Depth-First Tree Search."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def depth_first_tree_search(problem):\n",

" \"Search the deepest nodes in the search tree first.\"\n",

" # This algorithm might not work in case of repeated paths\n",

" # and may run into an infinite while loop.\n",

" iterations, all_node_colors, node = tree_search_for_vis(problem, Stack())\n",

" return(iterations, all_node_colors, node)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Oradea', romania_map)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=depth_first_tree_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"## 3. BREADTH-FIRST GRAPH SEARCH\n",

"\n",

"Let's change all the `node_colors` to starting position and define a different problem statement."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def breadth_first_search(problem):\n",

" \"[Figure 3.11]\"\n",

" \n",

" # we use these two variables at the time of visualisations\n",

" iterations = 0\n",

" all_node_colors = []\n",

" node_colors = {k : 'white' for k in problem.graph.nodes()}\n",

" \n",

" node = Node(problem.initial)\n",

" \n",

" node_colors[node.state] = \"red\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" if problem.goal_test(node.state):\n",

" node_colors[node.state] = \"green\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return(iterations, all_node_colors, node)\n",

" \n",

" frontier = FIFOQueue()\n",

" frontier.append(node)\n",

" \n",

" # modify the color of frontier nodes to blue\n",

" node_colors[node.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" explored = set()\n",

" while frontier:\n",

" node = frontier.pop()\n",

" node_colors[node.state] = \"red\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" explored.add(node.state) \n",

" \n",

" for child in node.expand(problem):\n",

" if child.state not in explored and child not in frontier:\n",

" if problem.goal_test(child.state):\n",

" node_colors[child.state] = \"green\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return(iterations, all_node_colors, child)\n",

" frontier.append(child)\n",

"\n",

" node_colors[child.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" node_colors[node.state] = \"gray\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return None"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Bucharest', romania_map)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=breadth_first_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 4. Depth-First Graph Search: \n",

"Although we have a working implementation in search module, we have to make a few changes in the algorithm to make it suitable for visualization."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def graph_search_for_vis(problem, frontier):\n",

" \"\"\"Search through the successors of a problem to find a goal.\n",

" The argument frontier should be an empty queue.\n",

" If two paths reach a state, only use the first one. [Figure 3.7]\"\"\"\n",

" # we use these two variables at the time of visualisations\n",

" iterations = 0\n",

" all_node_colors = []\n",

" node_colors = {k : 'white' for k in problem.graph.nodes()}\n",

" \n",

" frontier.append(Node(problem.initial))\n",

" explored = set()\n",

" \n",

" # modify the color of frontier nodes to orange\n",

" node_colors[Node(problem.initial).state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" while frontier:\n",

" # Popping first node of queue\n",

" node = frontier.pop()\n",

" \n",

" # modify the currently searching node to red\n",

" node_colors[node.state] = \"red\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" if problem.goal_test(node.state):\n",

" # modify goal node to green after reaching the goal\n",

" node_colors[node.state] = \"green\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return(iterations, all_node_colors, node)\n",

" \n",

" explored.add(node.state)\n",

" frontier.extend(child for child in node.expand(problem)\n",

" if child.state not in explored and\n",

" child not in frontier)\n",

" \n",

" for n in frontier:\n",

" # modify the color of frontier nodes to orange\n",

" node_colors[n.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

"\n",

" # modify the color of explored nodes to gray\n",

" node_colors[node.state] = \"gray\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" return None\n",

"\n",

"\n",

"def depth_first_graph_search(problem):\n",

" \"\"\"Search the deepest nodes in the search tree first.\"\"\"\n",

" iterations, all_node_colors, node = graph_search_for_vis(problem, Stack())\n",

" return(iterations, all_node_colors, node)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Bucharest', romania_map)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=depth_first_graph_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 5. BEST FIRST SEARCH\n",

"\n",

"Let's change all the `node_colors` to starting position and define a different problem statement."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def best_first_graph_search_for_vis(problem, f):\n",

" \"\"\"Search the nodes with the lowest f scores first.\n",

" You specify the function f(node) that you want to minimize; for example,\n",

" if f is a heuristic estimate to the goal, then we have greedy best\n",

" first search; if f is node.depth then we have breadth-first search.\n",

" There is a subtlety: the line \"f = memoize(f, 'f')\" means that the f\n",

" values will be cached on the nodes as they are computed. So after doing\n",

" a best first search you can examine the f values of the path returned.\"\"\"\n",

" \n",

" # we use these two variables at the time of visualisations\n",

" iterations = 0\n",

" all_node_colors = []\n",

" node_colors = {k : 'white' for k in problem.graph.nodes()}\n",

" \n",

" f = memoize(f, 'f')\n",

" node = Node(problem.initial)\n",

" \n",

" node_colors[node.state] = \"red\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" if problem.goal_test(node.state):\n",

" node_colors[node.state] = \"green\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return(iterations, all_node_colors, node)\n",

" \n",

" frontier = PriorityQueue(min, f)\n",

" frontier.append(node)\n",

" \n",

" node_colors[node.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" explored = set()\n",

" while frontier:\n",

" node = frontier.pop()\n",

" \n",

" node_colors[node.state] = \"red\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" \n",

" if problem.goal_test(node.state):\n",

" node_colors[node.state] = \"green\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return(iterations, all_node_colors, node)\n",

" \n",

" explored.add(node.state)\n",

" for child in node.expand(problem):\n",

" if child.state not in explored and child not in frontier:\n",

" frontier.append(child)\n",

" node_colors[child.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" elif child in frontier:\n",

" incumbent = frontier[child]\n",

" if f(child) < f(incumbent):\n",

" del frontier[incumbent]\n",

" frontier.append(child)\n",

" node_colors[child.state] = \"orange\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

"\n",

" node_colors[node.state] = \"gray\"\n",

" iterations += 1\n",

" all_node_colors.append(dict(node_colors))\n",

" return None"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 6. UNIFORM COST SEARCH\n",

"\n",

"Let's change all the `node_colors` to starting position and define a different problem statement."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def uniform_cost_search(problem):\n",

" \"[Figure 3.14]\"\n",

" #Uniform Cost Search uses Best First Search algorithm with f(n) = g(n)\n",

" iterations, all_node_colors, node = best_first_graph_search_for_vis(problem, lambda node: node.path_cost)\n",

" return(iterations, all_node_colors, node)\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Bucharest', romania_map)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=uniform_cost_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## GREEDY BEST FIRST SEARCH\n",

"Let's change all the node_colors to starting position and define a different problem statement."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def greedy_best_first_search(problem, h=None):\n",

" \"\"\"Greedy Best-first graph search is an informative searching algorithm with f(n) = h(n).\n",

" You need to specify the h function when you call best_first_search, or\n",

" else in your Problem subclass.\"\"\"\n",

" h = memoize(h or problem.h, 'h')\n",

" iterations, all_node_colors, node = best_first_graph_search_for_vis(problem, lambda n: h(n))\n",

" return(iterations, all_node_colors, node)\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Bucharest', romania_map)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=greedy_best_first_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## 9. A\\* SEARCH\n",

"\n",

"Let's change all the `node_colors` to starting position and define a different problem statement."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def astar_search(problem, h=None):\n",

" \"\"\"A* search is best-first graph search with f(n) = g(n)+h(n).\n",

" You need to specify the h function when you call astar_search, or\n",

" else in your Problem subclass.\"\"\"\n",

" h = memoize(h or problem.h, 'h')\n",

" iterations, all_node_colors, node = best_first_graph_search_for_vis(problem, \n",

" lambda n: n.path_cost + h(n))\n",

" return(iterations, all_node_colors, node)\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"all_node_colors = []\n",

"romania_problem = GraphProblem('Arad', 'Bucharest', romania_map)\n",

"display_visual(romania_graph_data, user_input=False, \n",

" algorithm=astar_search, \n",

" problem=romania_problem)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": false

},

"outputs": [],

"source": [

"all_node_colors = []\n",

"# display_visual(romania_graph_data, user_input=True, algorithm=breadth_first_tree_search)\n",

"algorithms = { \"Breadth First Tree Search\": breadth_first_tree_search,\n",

" \"Depth First Tree Search\": depth_first_tree_search,\n",

" \"Breadth First Search\": breadth_first_search,\n",

" \"Depth First Graph Search\": depth_first_graph_search,\n",

" \"Uniform Cost Search\": uniform_cost_search,\n",

" \"A-star Search\": astar_search}\n",

"display_visual(romania_graph_data, algorithm=algorithms, user_input=True)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## A* Heuristics\n",

"\n",

"Different heuristics provide different efficiency in solving A* problems which are generally defined by the number of explored nodes as well as the branching factor. With the classic 8 puzzle we can show the efficiency of different heuristics through the number of explored nodes.\n",

"\n",

"### 8 Puzzle Problem\n",

"\n",

"The *8 Puzzle Problem* consists of a 3x3 tray in which the goal is to get the initial configuration to the goal state by shifting the numbered tiles into the blank space.\n",

"\n",

"example:- \n",

"\n",

" Initial State Goal State\n",

" | 7 | 2 | 4 | | 0 | 1 | 2 |\n",

" | 5 | 0 | 6 | | 3 | 4 | 5 |\n",

" | 8 | 3 | 1 | | 6 | 7 | 8 |\n",

" \n",

"We have a total of 9 blank tiles giving us a total of 9! initial configuration but not all of these are solvable. The solvability of a configuration can be checked by calculating the Inversion Permutation. If the total Inversion Permutation is even then the initial configuration is solvable else the initial configuration is not solvable which means that only 9!/2 initial states lead to a solution.\n",

"\n",

"#### Heuristics :-\n",

"\n",

"1) Manhattan Distance:- For the 8 puzzle problem Manhattan distance is defined as the distance of a tile from its goal state( for the tile numbered '1' in the initial configuration Manhattan distance is 4 \"2 for left and 2 for upward displacement\").\n",

"\n",

"2) No. of Misplaced Tiles:- The heuristic calculates the number of misplaced tiles between the current state and goal state.\n",

"\n",

"3) Sqrt of Manhattan Distance:- It calculates the square root of Manhattan distance.\n",

"\n",

"4) Max Heuristic:- It assign the score as the maximum between \"Manhattan Distance\" and \"No. of Misplaced Tiles\". "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# Heuristics for 8 Puzzle Problem\n",

"\n",

"def linear(state,goal):\n",

" return sum([1 if state[i] != goal[i] else 0 for i in range(8)])\n",

"\n",

"def manhanttan(state,goal):\n",

" index_goal = {0:[2,2], 1:[0,0], 2:[0,1], 3:[0,2], 4:[1,0], 5:[1,1], 6:[1,2], 7:[2,0], 8:[2,1]}\n",

" index_state = {}\n",

" index = [[0,0], [0,1], [0,2], [1,0], [1,1], [1,2], [2,0], [2,1], [2,2]]\n",

" x, y = 0, 0\n",

" \n",

" for i in range(len(state)):\n",

" index_state[state[i]] = index[i]\n",

" \n",

" mhd = 0\n",

" \n",

" for i in range(8):\n",

" for j in range(2):\n",

" mhd = abs(index_goal[i][j] - index_state[i][j]) + mhd\n",

" \n",

" return mhd\n",

"\n",

"def sqrt_manhanttan(state,goal):\n",

" index_goal = {0:[2,2], 1:[0,0], 2:[0,1], 3:[0,2], 4:[1,0], 5:[1,1], 6:[1,2], 7:[2,0], 8:[2,1]}\n",

" index_state = {}\n",

" index = [[0,0], [0,1], [0,2], [1,0], [1,1], [1,2], [2,0], [2,1], [2,2]]\n",

" x, y = 0, 0\n",

" \n",

" for i in range(len(state)):\n",

" index_state[state[i]] = index[i]\n",

" \n",

" mhd = 0\n",

" \n",

" for i in range(8):\n",

" for j in range(2):\n",

" mhd = (index_goal[i][j] - index_state[i][j])**2 + mhd\n",

" \n",

" return math.sqrt(mhd)\n",

"\n",

"def max_heuristic(state,goal):\n",

" score1 = manhanttan(state, goal)\n",

" score2 = linear(state, goal)\n",

" return max(score1, score2)"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# Solving the puzzle \n",

"puzzle = EightPuzzle()\n",

"puzzle.checkSolvability([2,4,3,1,5,6,7,8,0]) # checks whether the initialized configuration is solvable or not\n",

"puzzle.solve([2,4,3,1,5,6,7,8,0], [1,2,3,4,5,6,7,8,0],max_heuristic) # Max_heuristic\n",

"puzzle.solve([2,4,3,1,5,6,7,8,0], [1,2,3,4,5,6,7,8,0],linear) # Linear\n",

"puzzle.solve([2,4,3,1,5,6,7,8,0], [1,2,3,4,5,6,7,8,0],manhanttan) # Manhattan\n",

"puzzle.solve([2,4,3,1,5,6,7,8,0], [1,2,3,4,5,6,7,8,0],sqrt_manhanttan) # Sqrt_manhattan"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## HILL CLIMBING\n",

"\n",

"Hill Climbing is a heuristic search used for optimization problems.\n",

"Given a large set of inputs and a good heuristic function, it tries to find a sufficiently good solution to the problem. \n",

"This solution may or may not be the global optimum.\n",

"The algorithm is a variant of generate and test algorithm. \n",

"

\n",

"As a whole, the algorithm works as follows:\n",

"- Evaluate the initial state.\n",

"- If it is equal to the goal state, return.\n",

"- Find a neighboring state (one which is heuristically similar to the current state)\n",

"- Evaluate this state. If it is closer to the goal state than before, replace the initial state with this state and repeat these steps.\n",

"

"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"psource(hill_climbing)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We will find an approximate solution to the traveling salespersons problem using this algorithm.\n",

"

\n",

"We need to define a class for this problem.\n",

"

\n",

"`Problem` will be used as a base class."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"class TSP_problem(Problem):\n",

"\n",

" \"\"\" subclass of Problem to define various functions \"\"\"\n",

"\n",

" def two_opt(self, state):\n",

" \"\"\" Neighbour generating function for Traveling Salesman Problem \"\"\"\n",

" neighbour_state = state[:]\n",

" left = random.randint(0, len(neighbour_state) - 1)\n",

" right = random.randint(0, len(neighbour_state) - 1)\n",

" if left > right:\n",

" left, right = right, left\n",

" neighbour_state[left: right + 1] = reversed(neighbour_state[left: right + 1])\n",

" return neighbour_state\n",

"\n",

" def actions(self, state):\n",

" \"\"\" action that can be excuted in given state \"\"\"\n",

" return [self.two_opt]\n",

"\n",

" def result(self, state, action):\n",

" \"\"\" result after applying the given action on the given state \"\"\"\n",

" return action(state)\n",

"\n",

" def path_cost(self, c, state1, action, state2):\n",

" \"\"\" total distance for the Traveling Salesman to be covered if in state2 \"\"\"\n",

" cost = 0\n",

" for i in range(len(state2) - 1):\n",

" cost += distances[state2[i]][state2[i + 1]]\n",

" cost += distances[state2[0]][state2[-1]]\n",

" return cost\n",

"\n",

" def value(self, state):\n",

" \"\"\" value of path cost given negative for the given state \"\"\"\n",

" return -1 * self.path_cost(None, None, None, state)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We will use cities from the Romania map as our cities for this problem.\n",

"

\n",

"A list of all cities and a dictionary storing distances between them will be populated."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"distances = {}\n",

"all_cities = []\n",

"\n",

"for city in romania_map.locations.keys():\n",

" distances[city] = {}\n",

" all_cities.append(city)\n",

" \n",

"all_cities.sort()\n",

"print(all_cities)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Next, we need to populate the individual lists inside the dictionary with the manhattan distance between the cities."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"import numpy as np\n",

"for name_1, coordinates_1 in romania_map.locations.items():\n",

" for name_2, coordinates_2 in romania_map.locations.items():\n",

" distances[name_1][name_2] = np.linalg.norm(\n",

" [coordinates_1[0] - coordinates_2[0], coordinates_1[1] - coordinates_2[1]])\n",

" distances[name_2][name_1] = np.linalg.norm(\n",

" [coordinates_1[0] - coordinates_2[0], coordinates_1[1] - coordinates_2[1]])"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The way neighbours are chosen currently isn't suitable for the travelling salespersons problem.\n",

"We need a neighboring state that is similar in total path distance to the current state.\n",

"

\n",

"We need to change the function that finds neighbors."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def hill_climbing(problem):\n",

" \n",

" \"\"\"From the initial node, keep choosing the neighbor with highest value,\n",

" stopping when no neighbor is better. [Figure 4.2]\"\"\"\n",

" \n",

" def find_neighbors(state, number_of_neighbors=100):\n",

" \"\"\" finds neighbors using two_opt method \"\"\"\n",

" \n",

" neighbors = []\n",

" \n",

" for i in range(number_of_neighbors):\n",

" new_state = problem.two_opt(state)\n",

" neighbors.append(Node(new_state))\n",

" state = new_state\n",

" \n",

" return neighbors\n",

"\n",

" # as this is a stochastic algorithm, we will set a cap on the number of iterations\n",

" iterations = 10000\n",

" \n",

" current = Node(problem.initial)\n",

" while iterations:\n",

" neighbors = find_neighbors(current.state)\n",

" if not neighbors:\n",

" break\n",

" neighbor = argmax_random_tie(neighbors,\n",

" key=lambda node: problem.value(node.state))\n",

" if problem.value(neighbor.state) <= problem.value(current.state):\n",

" current.state = neighbor.state\n",

" iterations -= 1\n",

" \n",

" return current.state"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"An instance of the TSP_problem class will be created."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"tsp = TSP_problem(all_cities)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We can now generate an approximate solution to the problem by calling `hill_climbing`.\n",

"The results will vary a bit each time you run it."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"hill_climbing(tsp)"

]

},

{

"cell_type": "markdown",

"metadata": {},

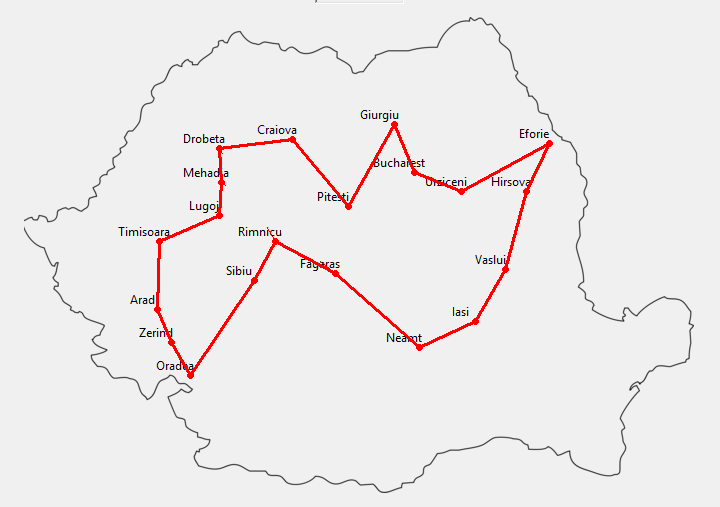

"source": [

"The solution looks like this.\n",

"It is not difficult to see why this might be a good solution.\n",

"

\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## GENETIC ALGORITHM\n",

"\n",

"Genetic algorithms (or GA) are inspired by natural evolution and are particularly useful in optimization and search problems with large state spaces.\n",

"\n",

"Given a problem, algorithms in the domain make use of a *population* of solutions (also called *states*), where each solution/state represents a feasible solution. At each iteration (often called *generation*), the population gets updated using methods inspired by biology and evolution, like *crossover*, *mutation* and *natural selection*."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Overview\n",

"\n",

"A genetic algorithm works in the following way:\n",

"\n",

"1) Initialize random population.\n",

"\n",

"2) Calculate population fitness.\n",

"\n",

"3) Select individuals for mating.\n",

"\n",

"4) Mate selected individuals to produce new population.\n",

"\n",

" * Random chance to mutate individuals.\n",

"\n",

"5) Repeat from step 2) until an individual is fit enough or the maximum number of iterations was reached."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Glossary\n",

"\n",

"Before we continue, we will lay the basic terminology of the algorithm.\n",

"\n",

"* Individual/State: A list of elements (called *genes*) that represent possible solutions.\n",

"\n",

"* Population: The list of all the individuals/states.\n",

"\n",

"* Gene pool: The alphabet of possible values for an individual's genes.\n",

"\n",

"* Generation/Iteration: The number of times the population will be updated.\n",

"\n",

"* Fitness: An individual's score, calculated by a function specific to the problem."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

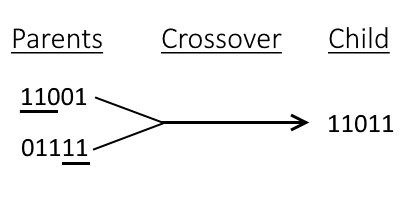

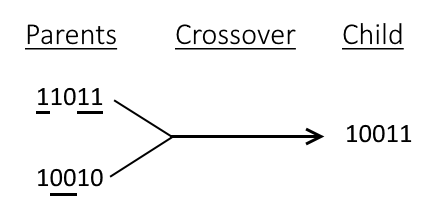

"### Crossover\n",

"\n",

"Two individuals/states can \"mate\" and produce one child. This offspring bears characteristics from both of its parents. There are many ways we can implement this crossover. Here we will take a look at the most common ones. Most other methods are variations of those below.\n",

"\n",

"* Point Crossover: The crossover occurs around one (or more) point. The parents get \"split\" at the chosen point or points and then get merged. In the example below we see two parents get split and merged at the 3rd digit, producing the following offspring after the crossover.\n",

"\n",

"\n",

"\n",

"* Uniform Crossover: This type of crossover chooses randomly the genes to get merged. Here the genes 1, 2 and 5 were chosen from the first parent, so the genes 3, 4 were added by the second parent.\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Mutation\n",

"\n",

"When an offspring is produced, there is a chance it will mutate, having one (or more, depending on the implementation) of its genes altered.\n",

"\n",

"For example, let's say the new individual to undergo mutation is \"abcde\". Randomly we pick to change its third gene to 'z'. The individual now becomes \"abzde\" and is added to the population."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Selection\n",

"\n",

"At each iteration, the fittest individuals are picked randomly to mate and produce offsprings. We measure an individual's fitness with a *fitness function*. That function depends on the given problem and it is used to score an individual. Usually the higher the better.\n",

"\n",

"The selection process is this:\n",

"\n",

"1) Individuals are scored by the fitness function.\n",

"\n",

"2) Individuals are picked randomly, according to their score (higher score means higher chance to get picked). Usually the formula to calculate the chance to pick an individual is the following (for population *P* and individual *i*):\n",

"\n",

"$$ chance(i) = \\dfrac{fitness(i)}{\\sum_{k \\, in \\, P}{fitness(k)}} $$"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Implementation\n",

"\n",

"Below we look over the implementation of the algorithm in the `search` module.\n",

"\n",

"First the implementation of the main core of the algorithm:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"psource(genetic_algorithm)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The algorithm takes the following input:\n",

"\n",

"* `population`: The initial population.\n",

"\n",

"* `fitness_fn`: The problem's fitness function.\n",

"\n",

"* `gene_pool`: The gene pool of the states/individuals. By default 0 and 1.\n",

"\n",

"* `f_thres`: The fitness threshold. If an individual reaches that score, iteration stops. By default 'None', which means the algorithm will not halt until the generations are ran.\n",

"\n",

"* `ngen`: The number of iterations/generations.\n",

"\n",

"* `pmut`: The probability of mutation.\n",

"\n",

"The algorithm gives as output the state with the largest score."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"For each generation, the algorithm updates the population. First it calculates the fitnesses of the individuals, then it selects the most fit ones and finally crosses them over to produce offsprings. There is a chance that the offspring will be mutated, given by `pmut`. If at the end of the generation an individual meets the fitness threshold, the algorithm halts and returns that individual.\n",

"\n",

"The function of mating is accomplished by the method `recombine`:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"psource(recombine)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The method picks at random a point and merges the parents (`x` and `y`) around it.\n",

"\n",

"The mutation is done in the method `mutate`:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"psource(mutate)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We pick a gene in `x` to mutate and a gene from the gene pool to replace it with.\n",

"\n",

"To help initializing the population we have the helper function `init_population`\":"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"psource(init_population)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The function takes as input the number of individuals in the population, the gene pool and the length of each individual/state. It creates individuals with random genes and returns the population when done."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Explanation\n",

"\n",

"Before we solve problems using the genetic algorithm, we will explain how to intuitively understand the algorithm using a trivial example.\n",

"\n",

"#### Generating Phrases\n",

"\n",

"In this problem, we use a genetic algorithm to generate a particular target phrase from a population of random strings. This is a classic example that helps build intuition about how to use this algorithm in other problems as well. Before we break the problem down, let us try to brute force the solution. Let us say that we want to generate the phrase \"genetic algorithm\". The phrase is 17 characters long. We can use any character from the 26 lowercase characters and the space character. To generate a random phrase of length 17, each space can be filled in 27 ways. So the total number of possible phrases is\n",

"\n",

"$$ 27^{17} = 2153693963075557766310747 $$\n",

"\n",

"which is a massive number. If we wanted to generate the phrase \"Genetic Algorithm\", we would also have to include all the 26 uppercase characters into consideration thereby increasing the sample space from 27 characters to 53 characters and the total number of possible phrases then would be\n",

"\n",

"$$ 53^{17} = 205442259656281392806087233013 $$\n",

"\n",

"If we wanted to include punctuations and numerals into the sample space, we would have further complicated an already impossible problem. Hence, brute forcing is not an option. Now we'll apply the genetic algorithm and see how it significantly reduces the search space. We essentially want to *evolve* our population of random strings so that they better approximate the target phrase as the number of generations increase. Genetic algorithms work on the principle of Darwinian Natural Selection according to which, there are three key concepts that need to be in place for evolution to happen. They are:\n",

"\n",

"* **Heredity**: There must be a process in place by which children receive the properties of their parents.

\n",

"For this particular problem, two strings from the population will be chosen as parents and will be split at a random index and recombined as described in the `recombine` function to create a child. This child string will then be added to the new generation.\n",

"\n",

"\n",

"* **Variation**: There must be a variety of traits present in the population or a means with which to introduce variation.

If there is no variation in the sample space, we might never reach the global optimum. To ensure that there is enough variation, we can initialize a large population, but this gets computationally expensive as the population gets larger. Hence, we often use another method called mutation. In this method, we randomly change one or more characters of some strings in the population based on a predefined probability value called the mutation rate or mutation probability as described in the `mutate` function. The mutation rate is usually kept quite low. A mutation rate of zero fails to introduce variation in the population and a high mutation rate (say 50%) is as good as a coin flip and the population fails to benefit from the previous recombinations. An optimum balance has to be maintained between population size and mutation rate so as to reduce the computational cost as well as have sufficient variation in the population.\n",

"\n",

"\n",

"* **Selection**: There must be some mechanism by which some members of the population have the opportunity to be parents and pass down their genetic information and some do not. This is typically referred to as \"survival of the fittest\".

\n",

"There has to be some way of determining which phrases in our population have a better chance of eventually evolving into the target phrase. This is done by introducing a fitness function that calculates how close the generated phrase is to the target phrase. The function will simply return a scalar value corresponding to the number of matching characters between the generated phrase and the target phrase."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Before solving the problem, we first need to define our target phrase."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"target = 'Genetic Algorithm'"

]

},

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"We then need to define our gene pool, i.e the elements which an individual from the population might comprise of. Here, the gene pool contains all uppercase and lowercase letters of the English alphabet and the space character."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# The ASCII values of uppercase characters ranges from 65 to 91\n",

"u_case = [chr(x) for x in range(65, 91)]\n",

"# The ASCII values of lowercase characters ranges from 97 to 123\n",

"l_case = [chr(x) for x in range(97, 123)]\n",

"\n",

"gene_pool = []\n",

"gene_pool.extend(u_case) # adds the uppercase list to the gene pool\n",

"gene_pool.extend(l_case) # adds the lowercase list to the gene pool\n",

"gene_pool.append(' ') # adds the space character to the gene pool"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We now need to define the maximum size of each population. Larger populations have more variation but are computationally more expensive to run algorithms on."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"max_population = 100"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"As our population is not very large, we can afford to keep a relatively large mutation rate."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"mutation_rate = 0.07 # 7%"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Great! Now, we need to define the most important metric for the genetic algorithm, i.e the fitness function. This will simply return the number of matching characters between the generated sample and the target phrase."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def fitness_fn(sample):\n",

" # initialize fitness to 0\n",

" fitness = 0\n",

" for i in range(len(sample)):\n",

" # increment fitness by 1 for every matching character\n",

" if sample[i] == target[i]:\n",

" fitness += 1\n",

" return fitness"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Before we run our genetic algorithm, we need to initialize a random population. We will use the `init_population` function to do this. We need to pass in the maximum population size, the gene pool and the length of each individual, which in this case will be the same as the length of the target phrase."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"population = init_population(max_population, gene_pool, len(target))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We will now define how the individuals in the population should change as the number of generations increases. First, the `select` function will be run on the population to select *two* individuals with high fitness values. These will be the parents which will then be recombined using the `recombine` function to generate the child."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"parents = select(2, population, fitness_fn) "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"# The recombine function takes two parents as arguments, so we need to unpack the previous variable\n",

"child = recombine(*parents)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Next, we need to apply a mutation according to the mutation rate. We call the `mutate` function on the child with the gene pool and mutation rate as the additional arguments."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"child = mutate(child, gene_pool, mutation_rate)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The above lines can be condensed into\n",

"\n",

"`child = mutate(recombine(*select(2, population, fitness_fn)), gene_pool, mutation_rate)`\n",

"\n",

"And, we need to do this `for` every individual in the current population to generate the new population."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"population = [mutate(recombine(*select(2, population, fitness_fn)), gene_pool, mutation_rate) for i in range(len(population))]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The individual with the highest fitness can then be found using the `max` function."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"current_best = max(population, key=fitness_fn)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Let's print this out"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"print(current_best)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We see that this is a list of characters. This can be converted to a string using the join function"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"current_best_string = ''.join(current_best)\n",

"print(current_best_string)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We now need to define the conditions to terminate the algorithm. This can happen in two ways\n",

"1. Termination after a predefined number of generations\n",

"2. Termination when the fitness of the best individual of the current generation reaches a predefined threshold value.\n",

"\n",

"We define these variables below"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"ngen = 1200 # maximum number of generations\n",

"# we set the threshold fitness equal to the length of the target phrase\n",

"# i.e the algorithm only terminates whne it has got all the characters correct \n",

"# or it has completed 'ngen' number of generations\n",

"f_thres = len(target)"

]

},

{

"cell_type": "markdown",

"metadata": {

"collapsed": true

},

"source": [

"To generate `ngen` number of generations, we run a `for` loop `ngen` number of times. After each generation, we calculate the fitness of the best individual of the generation and compare it to the value of `f_thres` using the `fitness_threshold` function. After every generation, we print out the best individual of the generation and the corresponding fitness value. Lets now write a function to do this."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def genetic_algorithm_stepwise(population, fitness_fn, gene_pool=[0, 1], f_thres=None, ngen=1200, pmut=0.1):\n",

" for generation in range(ngen):\n",

" population = [mutate(recombine(*select(2, population, fitness_fn)), gene_pool, pmut) for i in range(len(population))]\n",

" # stores the individual genome with the highest fitness in the current population\n",

" current_best = ''.join(max(population, key=fitness_fn))\n",

" print(f'Current best: {current_best}\\t\\tGeneration: {str(generation)}\\t\\tFitness: {fitness_fn(current_best)}\\r', end='')\n",

" \n",

" # compare the fitness of the current best individual to f_thres\n",

" fittest_individual = fitness_threshold(fitness_fn, f_thres, population)\n",

" \n",

" # if fitness is greater than or equal to f_thres, we terminate the algorithm\n",

" if fittest_individual:\n",

" return fittest_individual, generation\n",

" return max(population, key=fitness_fn) , generation "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The function defined above is essentially the same as the one defined in `search.py` with the added functionality of printing out the data of each generation."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"psource(genetic_algorithm)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We have defined all the required functions and variables. Let's now create a new population and test the function we wrote above."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"population = init_population(max_population, gene_pool, len(target))\n",

"solution, generations = genetic_algorithm_stepwise(population, fitness_fn, gene_pool, f_thres, ngen, mutation_rate)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The genetic algorithm was able to converge!\n",

"We implore you to rerun the above cell and play around with `target, max_population, f_thres, ngen` etc parameters to get a better intuition of how the algorithm works. To summarize, if we can define the problem states in simple array format and if we can create a fitness function to gauge how good or bad our approximate solutions are, there is a high chance that we can get a satisfactory solution using a genetic algorithm. \n",

"- There is also a better GUI version of this program `genetic_algorithm_example.py` in the GUI folder for you to play around with."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Usage\n",

"\n",

"Below we give two example usages for the genetic algorithm, for a graph coloring problem and the 8 queens problem.\n",

"\n",

"#### Graph Coloring\n",

"\n",

"First we will take on the simpler problem of coloring a small graph with two colors. Before we do anything, let's imagine how a solution might look. First, we have to represent our colors. Say, 'R' for red and 'G' for green. These make up our gene pool. What of the individual solutions though? For that, we will look at our problem. We stated we have a graph. A graph has nodes and edges, and we want to color the nodes. Naturally, we want to store each node's color. If we have four nodes, we can store their colors in a list of genes, one for each node. A possible solution will then look like this: ['R', 'R', 'G', 'R']. In the general case, we will represent each solution with a list of chars ('R' and 'G'), with length the number of nodes.\n",

"\n",

"Next we need to come up with a fitness function that appropriately scores individuals. Again, we will look at the problem definition at hand. We want to color a graph. For a solution to be optimal, no edge should connect two nodes of the same color. How can we use this information to score a solution? A naive (and ineffective) approach would be to count the different colors in the string. So ['R', 'R', 'R', 'R'] has a score of 1 and ['R', 'R', 'G', 'G'] has a score of 2. Why that fitness function is not ideal though? Why, we forgot the information about the edges! The edges are pivotal to the problem and the above function only deals with node colors. We didn't use all the information at hand and ended up with an ineffective answer. How, then, can we use that information to our advantage?\n",

"\n",

"We said that the optimal solution will have all the edges connecting nodes of different color. So, to score a solution we can count how many edges are valid (aka connecting nodes of different color). That is a great fitness function!\n",

"\n",

"Let's jump into solving this problem using the `genetic_algorithm` function."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"First we need to represent the graph. Since we mostly need information about edges, we will just store the edges. We will denote edges with capital letters and nodes with integers:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"edges = {\n",

" 'A': [0, 1],\n",

" 'B': [0, 3],\n",

" 'C': [1, 2],\n",

" 'D': [2, 3]\n",

"}"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Edge 'A' connects nodes 0 and 1, edge 'B' connects nodes 0 and 3 etc.\n",

"\n",

"We already said our gene pool is 'R' and 'G', so we can jump right into initializing our population. Since we have only four nodes, `state_length` should be 4. For the number of individuals, we will try 8. We can increase this number if we need higher accuracy, but be careful! Larger populations need more computating power and take longer. You need to strike that sweet balance between accuracy and cost (the ultimate dilemma of the programmer!)."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"population = init_population(8, ['R', 'G'], 4)\n",

"print(population)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"We created and printed the population. You can see that the genes in the individuals are random and there are 8 individuals each with 4 genes.\n",

"\n",

"Next we need to write our fitness function. We previously said we want the function to count how many edges are valid. So, given a coloring/individual `c`, we will do just that:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"def fitness(c):\n",

" return sum(c[n1] != c[n2] for (n1, n2) in edges.values())"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Great! Now we will run the genetic algorithm and see what solution it gives."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"collapsed": true

},

"outputs": [],

"source": [

"solution = genetic_algorithm(population, fitness, gene_pool=['R', 'G'])\n",

"print(solution)"

]

},